Abstract

The space around the body not only expands the interaction space of a mobile device beyond its small screen, but also enables users to utilize their kinesthetic sense. Therefore, body-centric peephole interaction has gained considerable attention. To support its practical implementation, we propose OddEyeCam, which is a vision-based method that tracks the 3D location of a mobile device in an absolute, wide, and continuous manner with respect to the body of a user in both static and mobile environments. OddEyeCam tracks the body of a user using a wide-view RGB camera and obtains precise depth information using a narrow-view depth camera from a smartphone close to the body. We quantitatively evaluated OddEyeCam through an accuracy test and two user studies. The accuracy test showed that the average tracking accuracy of OddEyeCam was 4.17 cm and 4.47 cm in 3D space when a participant was standing and walking, respectively. In the first user study, we implemented various interaction scenarios and observed that OddEyeCam was well received by the participants. In the second user study, we observed that the peephole target acquisition task performed using our system followed Fitts’ law. We also analyzed the performance of OddEyeCam using the obtained measurements and observed that the participants completed the tasks with sufficient speed and accuracy.

Technical Concept

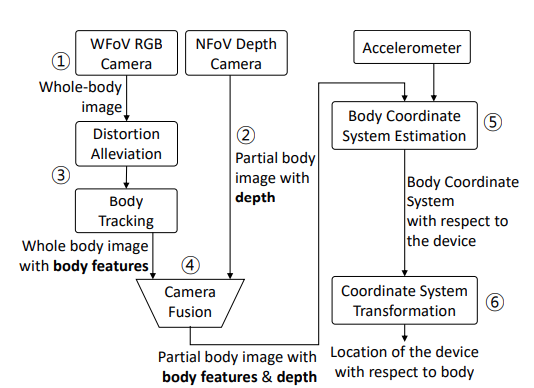

OddEyeCam estimates the 3D location of a smartphone with respect to the body. The process is as follows:

1. A WFoV (Wide Field of View) RGB camera provides a whole-body image.

2. An RGB-D camera provides a partial-body depth image and RGB image.

3. A distortion-alleviation module reduces the distortion of the fisheye image through an equirectangular projection. A body-tracking algorithm provides body keypoints from the undistorted image.

4. The body keypoints found in the WFoV image are projected onto the NFoV (Narrow Field of View) depth image by combining the two cameras.

5. Using the keypoints and depth information, as well as an additional gravity vector from an accelerometer, we estimate the body coordinate system.

6. We obtain the device location with respect to the body by converting the body position with respect to the camera (obtained in Step 5).
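The last two steps (estimating the body coordinate system, then converting it into a device location) can be illustrated with a minimal sketch. It is not the implementation from the paper. It assumes that the 3D positions of the two shoulder keypoints are already available in camera coordinates, that the accelerometer vector points downward in the same coordinates, and that the body origin is the midpoint of the shoulders. The function names and the axis convention (x to the user's right, y forward, z up) are hypothetical choices made for this example.

```python
import numpy as np


def unit(v):
    """Return v scaled to unit length."""
    v = np.asarray(v, dtype=float)
    n = np.linalg.norm(v)
    if n == 0:
        raise ValueError("cannot normalise a zero vector")
    return v / n


def estimate_body_frame(left_shoulder, right_shoulder, gravity):
    """Build a body coordinate system expressed in camera coordinates.

    Assumptions (not taken from the paper): the body origin is the
    shoulder midpoint, and `gravity` points downward.
    Returns (rotation, origin); the columns of `rotation` are the body
    axes right, forward, and up.
    """
    left = np.asarray(left_shoulder, dtype=float)
    right_kp = np.asarray(right_shoulder, dtype=float)

    up = -unit(gravity)
    across = right_kp - left
    # Remove the vertical component so the right axis stays horizontal
    # even when the shoulders are tilted or the keypoints are noisy.
    right = unit(across - np.dot(across, up) * up)
    # up x right points out of the chest; (right, forward, up) is right-handed.
    forward = np.cross(up, right)

    rotation = np.column_stack([right, forward, up])
    origin = (left + right_kp) / 2.0
    return rotation, origin


def device_location_in_body(rotation, origin):
    """Express the camera position (the camera-frame origin) in body axes."""
    camera_position = np.zeros(3)
    return rotation.T @ (camera_position - origin)


# Example: camera held 0.4 m in front of the user, facing the user.
# Camera axes assumed here: x right, y down, z away from the lens.
R, o = estimate_body_frame(
    left_shoulder=[0.2, 0.0, 0.4],
    right_shoulder=[-0.2, 0.0, 0.4],
    gravity=[0.0, 9.81, 0.0],
)
print(device_location_in_body(R, o))
```

Because the body pose is known relative to the camera, the device location relative to the body is simply the inverse transform applied to the camera origin. For the example values, this places the device about 0.4 m in front of the shoulder midpoint.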

Hardware Prototype

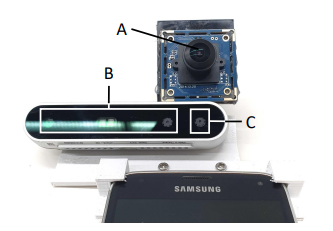

We used a USB camera with a 180° fisheye lens as the wide field of view RGB camera. An Intel RealSense D415 was chosen as the narrow field of view depth camera in our prototype.

Example Applications

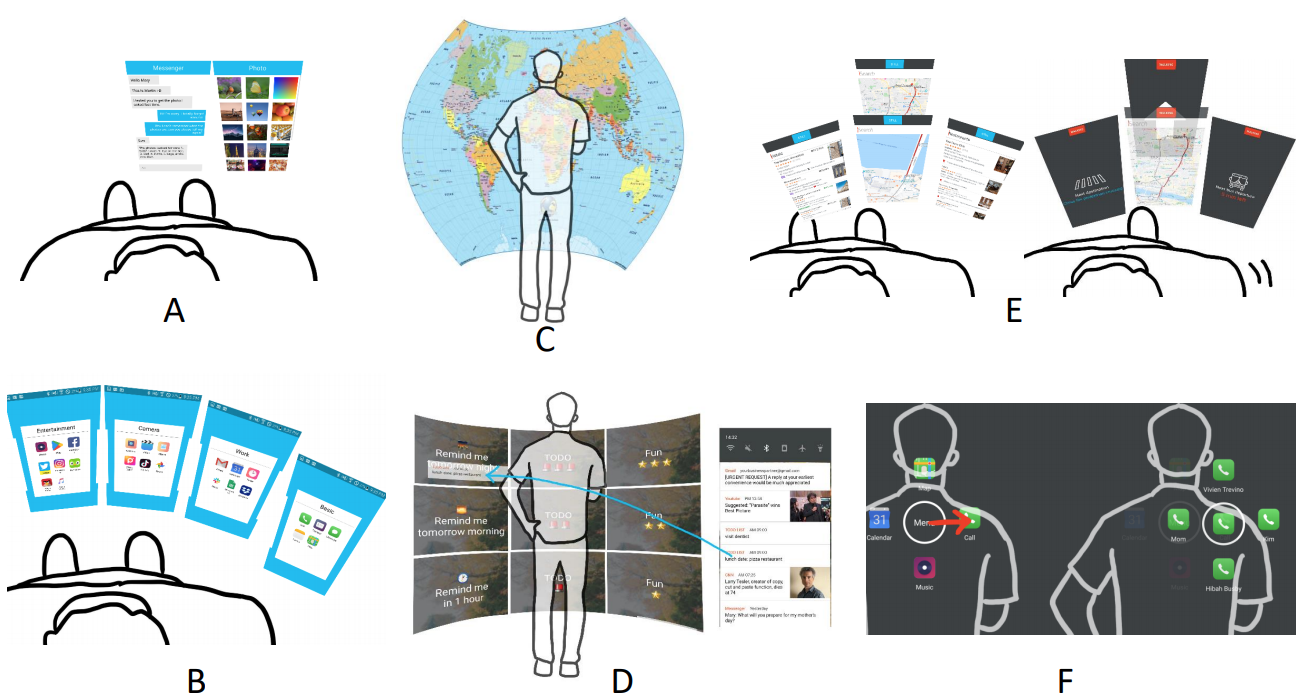

We created several applications to show various design possibilities of OddEyeCam.

- A. Drag and Drop Between Apps

- B. Body-Centric Folder

- C. Large-Image Viewer

- D. One-Hand Tagging

- E. Getting Directions

- F. Marking Menu

For more detail, please see our paper.

Resources

My Contributions

I participated in:

- project ideation

- accuracy test design and its software implementation